Self-driving cars, care bots and surgical robots are on their way. These artificial intelligence systems could make our roads safer, the lives of people with disabilities or the elderly easier, and patient recovery faster. The promises of artificial intelligence (AI) are huge, and their possibilities endless. But can we really trust machines with our lives? If they act on our behalf, will they do so in a responsible way? If something goes wrong with AI, who is to be held responsible? What happens when we fail to tackle bias and discrimination? At Utrecht University we work to design better, more responsible and trustworthy AI systems. That involves teaching machines how to reason and justify decisions, just as we would, but also solving some of the most pressing ethical and legal challenges AI presents.

Unboxing the black box of AI

Here’s a thought experiment: You’re standing by the tram stop when you catch sight of a runaway trolley rushing down the tracks towards five people who don’t see it coming. Now imagine you could divert the trolley onto a second track, which has just one person on it. What do you do? Would you sacrifice the one to save the five?

The trolley problem has been a classic dilemma in ethics or philosophy courses for decades. “Suddenly, as experimental self-driving cars started hitting the roads, we were faced with a real-world dilemma: what should the AI system be programmed to do in such a life-or-death situation?” poses philosopher Sven Nyholm from Utrecht University. “We will want to know before entrusting our safety to it.”

Knowing how AI systems reach decisions isn’t always clear or straightforward. Many algorithmic systems are a ‘black box’: information goes in, and decisions come out, but we have little idea how they arrived at those decisions.

“So, what if an autonomous car injures or kills someone? Who should be held responsible: Is it the distracted person sitting behind the wheel of the self-driving car? The authorities that allowed the car on the road? Or the manufacturer who set up the preferences to save the passenger at all costs?” asks Nyholm, who penned an influential paper about the pressing ethics of accident-algorithms for autonomous vehicles. “People want someone to blame, but we haven’t reached a universal agreement yet.”

As a society, discussing what these artificially intelligent systems should or should not do is essential before they start crowding our roads – and virtually every other aspect of our lives. Because how can we ever trust systems whose decisions we don't understand? How will we account for wrongdoings? And can they be prevented? Since the 1980s, Utrecht University has been building a diverse community of experts to ‘unbox’ artificial intelligence to allow the promises of this technological revolution to emerge.

“What makes artificial intelligence so impactful (and promising) is that it gives computers or machines the ability to model, simulate or even enhance human intelligence. AI systems can sense their environments, learn and reason to decide appropriate actions to achieve their designed objectives,” says Mehdi Dastani, programme leader of the Master in Artificial Intelligence at Utrecht University. He adds: “It is precisely the ability to learn from experience accrued from data that is so central to many of the revolutionary applications of AI today.” It’s the reason Google translations are now finally decent or why Gmail successfully filters 99.9% of spam. But it's essential to ensure that humans remain in control of the AI technology, says Dastani, and distinguish between the good, the bad and the ugly.

The good – using AI to treat disease

A virtual sleep coach for sufferers of insomnia, a game for children with chronic illness, or a robot that nudges people to keep their distance to prevent the spread of the coronavirus. Beyond the promise of convenience and work efficiencies, AI can help save lives. “AI systems can help detect abnormalities in patients’ medical images that can escape even the best physicians,” says Kenneth Gilhuijs, Associate Professor at University Medical Centre Utrecht’s Image Sciences Institute. Gilhuijs has developed a computer-aided diagnosis programme that might soon help doctors detect breast cancer earlier, faster, and more accurately than is possible today.

The idea came after a study, led by his colleague and cancer epidemiologist Carla van Gils, suggested that adopting Magnetic Resonance Imaging (MRI) in the screening of women with extremely dense breasts could help reduce breast-cancer mortality in this group. Adding MRI to standard mammography detected approximately 17 additional breast cancers; the downside was that 87.3% of the total number of lesions referred to additional MRI or biopsy were false-positive for cancer. “If the number of biopsies on benign lesions can be reduced, that would alleviate the burden on women and clinical resources,” Gilhuijs thought.

“We have now shown that the AI-powered system can reduce the number of false positives down to 64% without missing any cancer,” says Gilhuijs, in whose lab the algorithm was trained on a database of MRIs from 4,873 women in The Netherlands who had taken part in Van Gils’s study. “We want to improve it even further to aid radiologists, by enabling them to focus on cases that require greater human judgement.”

Oncologists are still responsible for the diagnosis, Gilhuijs remarks, but “they use the computer as a tool, just as they might use a stethoscope. It’s also important to note that the end users of the decision-support system – medical doctors – have been actively involved in its design. This ensures better acceptance and trust.”

The bad – using AI to overrule human decisions

But what happens when users aren’t involved and don’t know how an AI ‘second opinion’ came about?

What could happen is an affront to people’s rights, says Max Vetzo, a legal scholar at Utrecht University. That’s what happened when a judge in Wisconsin relied on an algorithmic tool called COMPAS to decide on the sentence of a man who was found driving a car involved in a shooting. The algorithm in question classified the defendant as being at ‘high risk’ of recidivism, so the then 35-year-old was sentenced to six years in prison. Vetzo: “Fundamental rights law guarantees defendants the right to rebut and fight a judge’s decision. In this case, when the lawyers appealed the decision, the judge couldn’t explain how the system reached that outcome.”

That’s not to say that the sentence was wrong, or that algorithmic tools can’t be used to positive effect, Vetzo notes. “AI can be a valuable ‘sparring partner’ for judges, and even reduce biases in the criminal-justice system,” he says. “But it’s the judges’ responsibility to ensure the transparency of the judicial process. They should, at least, be able to understand how these algorithmic systems reach decisions, and even help assess what legal problems arise from using AI. If not, how can a person’s fundamental right to a fair trial be safeguarded?” asks Vetzo, co-author of Algorithms and Fundamental Rights (in Dutch), an evaluation of the impact of new AI technologies, specifically in The Netherlands, on fundamental rights such as privacy, freedom of expression or the right to equal treatment.

AI can overrule human decisions in more surreptitious ways. “We found that algorithms that are connected to home applications, such as smart TVs or digital assistants, may cause a ‘chilling effect’, where people adjust their behaviour knowing that they can be monitored. That is a great concern for privacy. Or our view of the world can be narrowed by algorithms that select content based on online habits, as with music recommendation algorithms that end up being unfair to female artists.”

And the ugly – coded bias

But is it fair to blame it all on the technology? “We need to keep in mind that AI systems are not neutral systems,” says philosopher Jan Broersen, who chairs Utrecht University’s Human-Centred Artificial Intelligence research group together with computer scientist Mehdi Dastani. “Behind AI are people who express personal ideals of what a good outcome is or what success means, their values, preferences and prejudices, and all of this will be reflected in the system.”

Broersen illustrates: Say you’re developing a program to identify pets. If you train the algorithm on a million images of dogs because you find them cuter or friendlier, but only a few thousand pictures of cats, the algorithm’s idea of what a cat looks like will be less fully formed, and therefore it will be worse at recognising them. “AI can be biased because it makes decisions based on data or training choices that contain our own human biases,” Broersen explains.
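Broersen's pet example can be reproduced in miniature. The sketch below is an illustration under stated assumptions, not a description of any system mentioned here: each "image" is reduced to a single made-up number, dogs and cats are drawn from two overlapping synthetic distributions, and the "algorithm" simply learns the cut-off that makes the fewest mistakes on its training set. All names and numbers are hypothetical.

```python
import random

def sample(mean, n, rng):
    """Draw n synthetic one-number 'image features' around a class mean."""
    return [rng.gauss(mean, 1.0) for _ in range(n)]

def fit_threshold(dogs, cats):
    """Learn the cut-off with the fewest training mistakes.
    Anything above the cut-off is labelled 'cat'."""
    candidates = [i / 10 for i in range(-20, 61)]  # -2.0 up to 6.0

    def errors(t):
        return sum(x > t for x in dogs) + sum(x <= t for x in cats)

    return min(candidates, key=errors)

def recall(values, threshold, is_cat):
    """Share of one class that the learned cut-off labels correctly."""
    hits = sum((x > threshold) == is_cat for x in values)
    return hits / len(values)

def experiment(n_dogs, n_cats, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    t = fit_threshold(sample(0.0, n_dogs, rng), sample(2.0, n_cats, rng))
    # Evaluate on a fresh, balanced test set.
    test_dogs, test_cats = sample(0.0, 2000, rng), sample(2.0, 2000, rng)
    return {
        "threshold": t,
        "dog_recall": recall(test_dogs, t, False),
        "cat_recall": recall(test_cats, t, True),
    }

skewed = experiment(n_dogs=5000, n_cats=50)      # many dogs, few cats
balanced = experiment(n_dogs=5000, n_cats=5000)  # equal numbers

print("skewed:  ", skewed)
print("balanced:", balanced)
```

With far more dogs than cats in the training data, the cheapest way to avoid mistakes is to call almost everything a dog, so the learned cut-off drifts upwards and cats are recognised much less often than when both classes are equally represented.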

Another, bigger problem arises when the data sets that feed machine learning algorithms are based on historic discrimination. “Then, there’s a risk of not only reproducing structural inequalities, but even amplifying them.” Think of an algorithm trained to select candidates for a job: if most candidates have been men in the past, the algorithm is likely to reproduce that pattern and end up discriminating against women as a result.
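One simple way to see such a pattern before any model is trained is to compare how often each group was selected in the historical records. The sketch below uses invented numbers and hypothetical names throughout; it only measures the skew in past decisions that a model trained on them would be likely to pick up, and it is not a complete fairness audit.

```python
from collections import Counter

# Hypothetical historical hiring records: (group, was_hired).
history = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 6 + [("women", False)] * 14
)

def selection_rates(records):
    """Fraction of applicants hired, per group."""
    applied, hired = Counter(), Counter()
    for group, was_hired in records:
        applied[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / applied[group] for group in applied}

rates = selection_rates(history)

# Ratio of the lowest to the highest selection rate: 1.0 means parity,
# values well below 1.0 indicate that one group was selected far less often.
ratio = min(rates.values()) / max(rates.values())

print(rates)
print(ratio)
```

In this made-up history, women were hired at half the rate of men. A model that learns to imitate these decisions has every incentive to carry that gap forward.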

Examples of coded bias are everywhere; some close to home, such as the algorithms used by the Dutch Tax Authorities that led to racial profiling (widely known as the ‘toeslagenaffaire’). “In one important way,” Broersen adds, “algorithmic biases are bringing to the fore prejudices that were rampant in job hiring processes, creditworthiness evaluations or government applications. But once we become aware of them, machines are more easily de-biased than most humans are. It’s an opportunity for researchers of AI to do better.”

Explainable AI

So what are researchers at Utrecht University doing to create responsible and inclusive systems that we can trust? “If AI systems operate in a black box, we have no insight into how to fix the system if there’s a mistake,” says Natasha Alechina, coordinator of the Autonomous Intelligent Systems sub-group at Utrecht University, and a specialist in multi-agent systems. “Look at autonomous vehicles. We need to verify that the system is safe to drive, but also that their decision-making behaviour is explainable. Not only for passengers to determine whether they feel comfortable travelling in one, but also for AI builders to be able to optimise it if something gets damaged or someone gets injured.”

Explainable artificial intelligence means the reasoning behind a decision should be comprehensible to its human users. Computer scientist Floris Bex and his team at the National Police-lab AI have designed an explainable AI system to help the Dutch National Police assess crime reports more efficiently. “Of the 40,000 complaints citizens file every year, only a small percentage are considered a criminal offense by law, and therefore processed. If an automated system fails to explain why a complaint has been rejected, it might undermine public trust. We therefore designed our crime reporting tool with a chatbot that handles natural language and reasons with legal arguments so that citizens receive an understandable and legally sensible explanation for why their report won’t be processed.”

To be clear: an explainable system can still be prone to bias or errors. “Actually, we may never get completely bias-free AI models,” admits Dong Nguyen, Assistant Professor of Natural Language Processing at Utrecht University. “What we can do is devise methods that help us reveal biases in AI, so that those affected by a decision can challenge their outcome.” One method Nguyen is particularly keen on is making algorithmic models publicly available for scrutiny and evaluation. She is also working on a visualisation tool that allows tracing back what the models have learnt in order to easily pick up aspects that are responsible for bias. “Suppose an algorithm rates a restaurant poorly. If the explanation reveals that its decision was based on a problematic variable, like a foreign sounding name, instead of the restaurant's service, the owner could rebut the decision.”
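The restaurant example can be made concrete with a toy model. The sketch below is not Nguyen's tool; it assumes a simple linear rating model with invented weights and feature names, chosen only because each feature's contribution to the score can then be read off directly and checked for variables that should not matter.

```python
# Hypothetical weights of a linear rating model (illustrative values only).
WEIGHTS = {
    "service_score": 0.5,
    "food_score": 0.4,
    "foreign_sounding_name": -2.5,
}
BASELINE = 1.0

# Features that should play no role in a rating (an assumption of this sketch).
SENSITIVE = {"foreign_sounding_name"}

def explain(features):
    """Return the rating, each feature's contribution (largest influence
    first), and any sensitive feature that affected the outcome."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    rating = BASELINE + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    flagged = [name for name, amount in ranked if name in SENSITIVE and amount != 0]
    return rating, ranked, flagged

restaurant = {"service_score": 4.0, "food_score": 4.0, "foreign_sounding_name": 1.0}
rating, ranked, flagged = explain(restaurant)

print(f"rating: {rating:.1f}")
for name, amount in ranked:
    print(f"  {name}: {amount:+.1f}")
print("problematic variables:", flagged)
```

Here the explanation shows that the low rating is driven mainly by the restaurant's name rather than by its service or food, which is exactly the kind of evidence an owner would need to contest the decision. Real models are rarely this transparent, which is why dedicated explanation and visualisation methods are needed.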

Algorithmic accountability

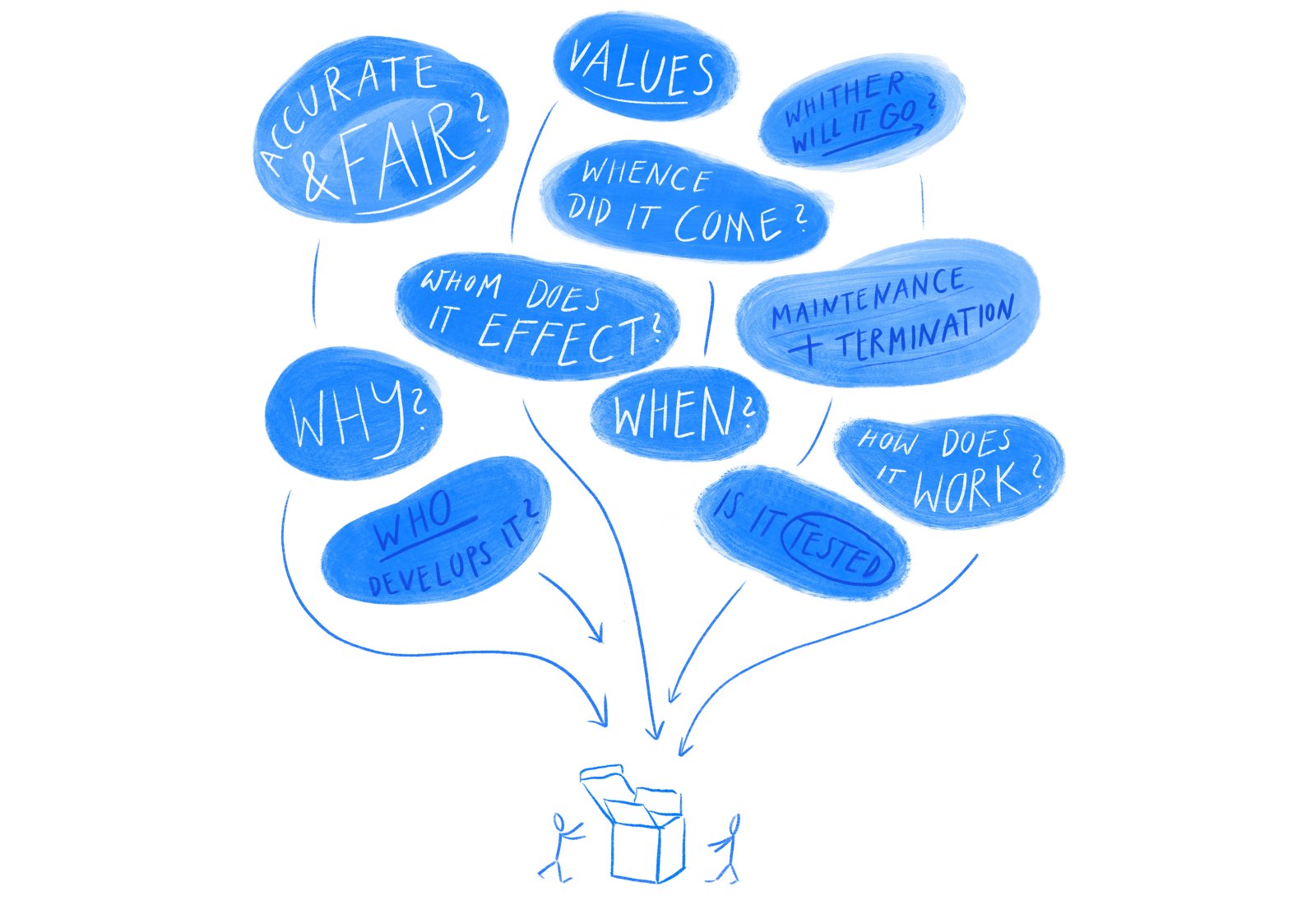

While explainable AI can help us understand how a system arrived at a decision, in itself it’s not enough to prevent wrongdoings. “We need to make everyone involved in the development or deployment of algorithms account for the systems’ impact in the world,” says media scholar Maranke Wieringa, who researches algorithmic accountability. “Designing accountability in AI requires us to ask critical questions: who designed what, why, in what context, in what period, how does it work, whom does it affect, whither the system goes, and whence it came?”

Wieringa is now developing a tool - BIAS - with colleagues from Utrecht Data School and in collaboration with Dutch municipalities that urges civil servants to consider the impact of algorithmic systems on their communities even before implementing them, such as the implications of using algorithms to predict how likely someone is to commit fraud over benefits or pay back a loan. Wieringa coaxes question after question, in a vivid illustration of the stream of thoughts this process entails: “How are we safeguarding non-discrimination in our system? Why is it legitimised? Who is responsible if the system does not work properly? How much error rate is acceptable? Did we consider alternatives to AI? And if so, why were they rejected?”

Wieringa draws on experience developed at the Utrecht Data School with their Data Ethics Decision Aid (DEDA), a toolkit that helps data analysts, project managers and policy makers recognise which ethical issues may be at stake in governmental data projects. “By facilitating a deliberation process around which values are important to the organisation – efficiency, openness or legitimacy, for example – DEDA stimulates value-sensitive design and changes in governance to respond responsibly to the ethical challenges constituted by algorithms,” says Mirko Schaefer, co-founder of Utrecht Data School. “Many municipalities and ministries in the Netherlands are already using DEDA to implement data and algorithms responsibly.”

Practically, says Schaefer, this participatory process enables users of algorithms to document their choices, so that values (and biases) baked into the system can be traceable now and in the future. “Algorithms are situated in a context and a place, but our norms and values change. What we found acceptable 50 years ago may be unacceptable now. So tools like BIAS and DEDA help organisations review their choices over time and adapt their design accordingly.” Together with colleagues from Utrecht Data School, Schaefer and Professor of Fundamental Rights Janneke Gerards have launched an Impact Assessment for Human Rights and Algorithms (IAMA) aimed at preventing human rights violations, such as the ‘toeslagenaffaire’.

Forward-looking responsibility

Self-driving cars are one example of where the law can't keep up: all traffic laws assume there’s a human doing the steering and the braking. Rather than leaving it to regulatory catch-up, researchers in Utrecht are getting ahead by thinking of how we can design autonomous systems now to keep them from behaving wrongly in the future – including in situations we can't even anticipate today.

One such instrument is deontic logic, says Jan Broersen, who’s leading a project to prepare intelligent systems to behave according to ethical and legal principles. “Deontic logic is about how to reason correctly with norms, that is, with obligations, permissions and prohibitions that are part of a moral or legal code. It could inform us about when it is appropriate for a self-driving car to violate traffic laws in order to prevent greater harm, for example when somebody has to be rushed to the hospital in order to save their life. If deontic logic can give us models for how to correctly draw conclusions on what an AI must do or is not allowed to do, given certain observations as inputs, we have a basis for machine ethics. And we can have more confidence in such (deontic) logic-based models than ones that need to learn how to behave ethically through machine learning.”
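To give a flavour of what reasoning with norms can look like in software, here is a deliberately small sketch. It is not a formal deontic logic and not Broersen's project: it is a toy rule set in which each norm is a prohibition or a permission, applies under a condition, and carries a priority that settles conflicts. The norms, conditions and priorities are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Norm:
    kind: str                          # "prohibition" or "permission"
    action: str
    condition: Callable[[dict], bool]  # does the norm apply in this situation?
    priority: int                      # higher priority wins when norms conflict

# Hypothetical norms for a self-driving car.
NORMS = [
    # By default, speeding is prohibited.
    Norm("prohibition", "exceed_speed_limit", lambda s: True, priority=1),
    # A medical emergency permits it ...
    Norm("permission", "exceed_speed_limit",
         lambda s: s.get("medical_emergency", False), priority=2),
    # ... unless pedestrians are nearby, which overrides the permission.
    Norm("prohibition", "exceed_speed_limit",
         lambda s: s.get("pedestrians_nearby", False), priority=3),
]

def status(action, situation):
    """Return the kind of the highest-priority norm that applies to the
    action in this situation, or 'unregulated' if no norm applies."""
    applicable = [n for n in NORMS if n.action == action and n.condition(situation)]
    if not applicable:
        return "unregulated"
    return max(applicable, key=lambda n: n.priority).kind

print(status("exceed_speed_limit", {}))
print(status("exceed_speed_limit", {"medical_emergency": True}))
print(status("exceed_speed_limit", {"medical_emergency": True, "pedestrians_nearby": True}))
```

The appeal of this style, even in toy form, is that every verdict can be traced back to an explicit rule that a human wrote and can inspect, rather than to behaviour absorbed from training data.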

With the pace of change, innovative forms of regulation are also needed, adds Pınar Yolum, Associate Professor of Intelligent Systems. “Our traffic laws may need to change as self-driving cars will be able to talk to each other and make decisions, for example, by negotiating who goes first at a crossing. That requires these AI systems to do something very human: use argumentation to negotiate and persuade.” Yolum is investigating how to train devices so that they can take decisions on our behalf, knowing our preferences over privacy.

Incidentally, this line of research invites a reflection about the process of trusting these systems. “We delegate responsibility to other people all the time! How do we get to trust them? By giving them small tasks. I sometimes compare this with children: ‘Ok, you can go to the park across the street. Then the next day you can go to the shop around the corner. Maybe a year later, you can go downtown.’ You build trust over these interactions and their results. Same with autonomous agents. That’s how I envision the relationship between humans and autonomous systems in the future.”

Diverse skills and people

Finally, if we are to trust that AI is moving in the right direction, we need to bring in everyone: different skills and diverse teams that can check on each other’s biases to produce a more inclusive output – and that starts with our education. “We’re equipping a new generation of AI professionals with interdisciplinary skills and a moral compass for the social context in which technologies evolve,” says Mehdi Dastani, programme leader of the Master’s programme in Artificial Intelligence and co-chair of the Human-Centred Artificial Intelligence group at Utrecht University. For one main reason: “AI powering our future intelligent (autonomous) systems is much more than learning from patterns extracted from big data. It is, above all, about rich representation systems, correct reasoning schemes, and about modelling intelligence that approaches problems and tasks efficiently and accurately. Those models can benefit from scientific knowledge accrued over time from different scientific disciplines, such as psychology, philosophy, linguistics, and computer science. But also from different cultural backgrounds and perspectives.”

At Utrecht University we work to make better AI: developing explainable systems so that users can understand or challenge their decisions and outcomes; programming responsible machines so that if something goes wrong or bias creeps in, organisations can redress it; and interacting with robots so that we create human-centred machines that embed our norms and values. We also educate the next generation to be creative about the smart systems of the future, while staying wary to prevent harmful advances or misuses of the technology. And we practise inclusive AI, by making interdisciplinary collaboration a trademark of our research and education. Together, we are reimagining a better future – for both humans and machines.